SLURM Multinode on Alces Cloud

Launch Login Node

Prepare User Data

When launching a login node it is worth considering what user data options to provide. While it is not required, user data can provide powerful customisation at launch that can further streamline the cluster build process.

There are several options that can be added to change how the login node will interact with compute nodes on startup.

- Sharing the public SSH key with clients:
    - Instead of manually obtaining and sharing the root public SSH key (passwordless root SSH is required for `flight profile`), it can be shared over the local network by adding the line `SHAREPUBKEY=true`.
- Add an auth key:
    - Add the line `AUTH_KEY=<string>`. This means that the node will only accept incoming Flight Hunter nodes that provide a matching authorisation key.

An example cloud-init script combining both options:

    #cloud-config
    write_files:
      - content: |
          SHAREPUBKEY=true
          AUTH_KEY=banana
        path: /opt/flight/cloudinit.in
        permissions: '0600'
        owner: root:root
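Rather than a guessable value such as `banana`, it may be worth generating a random auth key. The sketch below is a hypothetical bash helper (`write_login_userdata` is not part of Flight Solo) that writes the user data shown above to a file and prints the key it used, assuming `/dev/urandom` is available. The file name `myuserdata.yml` matches the one passed to `--user-data` in the launch command in the Deploy step.

```shell
# Hypothetical helper: write login node user data enabling SHAREPUBKEY and AUTH_KEY.
# Usage: write_login_userdata <output-file> [auth-key]
write_login_userdata() {
  local out=$1
  # If no key is given, generate 16 random bytes as 32 hex characters (assumes /dev/urandom).
  local key=${2:-$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')}
  cat > "$out" <<EOF
#cloud-config
write_files:
  - content: |
      SHAREPUBKEY=true
      AUTH_KEY=${key}
    path: /opt/flight/cloudinit.in
    permissions: '0600'
    owner: root:root
EOF
  # Print the key so it can be reused in the compute node user data.
  echo "$key"
}

# Example: key=$(write_login_userdata myuserdata.yml)
```

The printed key is the value that must later be given as `AUTH_KEY` to the compute nodes.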

Info

More information on the user data options available for Flight Solo can be found in the user data documentation.

Deploy

To set up a cluster, you will need to import a Flight Solo image.

Before setting up a cluster on Alces Cloud, there are several required prerequisites:

- Your own keypair

- A network with a subnet and a router bridging the subnet to the external network

- A security group that allows the traffic given below (if creating the security group through the web interface, the "Any" protocol will need to be an "Other Protocol" rule with an "IP Protocol" of `-1`)

| Protocol | Direction | CIDR | Port Range |
|---|---|---|---|
| Any | egress | 0.0.0.0/0 | any |
| Any | ingress | Virtual Network CIDR | any |
| ICMP | ingress | 0.0.0.0/0 | any |
| SSH | ingress | 0.0.0.0/0 | 22 |
| TCP | ingress | 0.0.0.0/0 | 80 |
| TCP | ingress | 0.0.0.0/0 | 443 |
| TCP | ingress | 0.0.0.0/0 | 5900-5903 |

Note

The "Virtual Network CIDR" is the subnet and netmask for the network that the nodes are using. For example, a node on the 11.11.11.0 network with a netmask of 255.255.255.0 would have a network CIDR of 11.11.11.0/24.
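The same calculation can be scripted. The bash sketch below (the function name `network_cidr` is hypothetical) ANDs each octet of an address with the netmask to get the network address, and counts the set bits in the netmask to get the prefix length. It assumes a valid, contiguous IPv4 netmask and octets written without leading zeros (which bash arithmetic would read as octal).

```shell
# Hypothetical helper: print the network CIDR for an IPv4 address and netmask.
# Usage: network_cidr <ip> <netmask>
network_cidr() {
  local ip=$1 mask=$2 prefix=0 n octet
  local -a i m net
  # Split both dotted quads into arrays of four octets.
  IFS=. read -r -a i <<< "$ip"
  IFS=. read -r -a m <<< "$mask"
  for n in 0 1 2 3; do
    # Network octet = address octet AND netmask octet.
    net[n]=$(( i[n] & m[n] ))
    # Count the 1 bits in this netmask octet to build the prefix length.
    octet=${m[n]}
    while (( octet > 0 )); do
      prefix=$(( prefix + (octet & 1) ))
      octet=$(( octet >> 1 ))
    done
  done
  echo "${net[0]}.${net[1]}.${net[2]}.${net[3]}/${prefix}"
}

# Example: network_cidr 11.11.11.0 255.255.255.0   # prints 11.11.11.0/24
```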

Launch a login node with a command similar to the following:

    $ openstack server create --flavor p1.small \
        --image "Flight Solo VERSION" \
        --boot-from-volume 16 \
        --network "mycluster1-network" \
        --key-name "MyKey" \
        --security-group "mycluster1-sg" \
        --user-data myuserdata.yml \
        login1

Where:

- `flavor` - The desired size of the instance
- `image` - The Flight Solo image imported to Alces Cloud
- `boot-from-volume` - The size of the system disk in GB
- `network` - The name or ID of the network created for the cluster
- `key-name` - The name of the SSH key to use
- `security-group` - The name or ID of the security group created previously
- `user-data` - The file containing cloud-init user data (this is optional in standalone scenarios)
- `login1` - The name of the system
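Cloud-init only processes user data as cloud-config when its first line is `#cloud-config`, so a mistyped header can mean the options above are not applied. A minimal pre-launch check of the file passed to `--user-data` might look like the following; `check_cloud_config` is a hypothetical bash helper, not an OpenStack or Flight command.

```shell
# Hypothetical helper: succeed only if the file is readable and its first line
# is exactly the #cloud-config header.
# Usage: check_cloud_config <user-data-file>
check_cloud_config() {
  local file=$1 first
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
  IFS= read -r first < "$file"
  # Strip a trailing carriage return in case the file was saved with Windows line endings.
  first=${first%$'\r'}
  if [ "$first" = "#cloud-config" ]; then
    return 0
  fi
  echo "$file: first line is '$first', expected '#cloud-config'" >&2
  return 1
}

# Example: check_cloud_config myuserdata.yml && openstack server create ...
```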

Associate a floating IP, either by using an existing one or creating a new one.

To use an existing floating IP, first identify the IP address of an available floating IP (its `Port` will be `None`):

    $ openstack floating ip list
    +---------------------+---------------------+------------------+---------------------+---------------------+-----------------------+
    | ID                  | Floating IP Address | Fixed IP Address | Port                | Floating Network    | Project               |
    +---------------------+---------------------+------------------+---------------------+---------------------+-----------------------+
    | 726318f4-4dbb-4d51- | 10.199.31.6         | None             | None                | c681d94b-e2ec-4b73- | dcd92da7538a4f64a42b0 |
    | b119-d9e53c47a9f5   |                     |                  |                     | 89bf-9943bcce3255   | d4d9ce8845f           |
    +---------------------+---------------------+------------------+---------------------+---------------------+-----------------------+

Then associate the floating IP with the instance:

    $ openstack server add floating ip login1 10.199.31.6
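Picking a free address can also be scripted. The sketch below assumes the `openstack` client's `-f value -c <column>` output options, which print one row per line, and assumes that unassociated addresses show `None` in the `Port` column as in the table above; `first_free_ip` is a hypothetical helper name.

```shell
# Hypothetical helper: read "<address> <port>" lines on stdin and print the first
# address whose port is None (i.e. not associated with any instance).
# Exits non-zero if no free address is found.
first_free_ip() {
  awk '$2 == "None" { print $1; found = 1; exit } END { exit !found }'
}

# Example:
#   ip=$(openstack floating ip list -f value -c "Floating IP Address" -c Port | first_free_ip)
#   openstack server add floating ip login1 "$ip"
```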

To create a new floating IP, first create it and note the `floating_ip_address` in the output:

    $ openstack floating ip create external1

Then associate the floating IP with the instance, using the `floating_ip_address` from the previous output:

    $ openstack server add floating ip login1 10.199.31.212
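The two steps can be combined so the new address does not need to be copied by hand. This is a sketch assuming the `openstack` client's `-f value -c floating_ip_address` output options; `allocate_and_associate` is a hypothetical name, and `external1` and `login1` are the example network and server names used in this guide.

```shell
# Hypothetical helper: allocate a floating IP from an external network,
# associate it with a server, and print the new address.
# Usage: allocate_and_associate <external-network> <server>
allocate_and_associate() {
  local network=$1 server=$2 ip
  ip=$(openstack floating ip create "$network" -f value -c floating_ip_address) || return 1
  [ -n "$ip" ] || { echo "no floating IP address returned" >&2; return 1; }
  openstack server add floating ip "$server" "$ip" || return 1
  echo "$ip"
}

# Example: allocate_and_associate external1 login1
```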


Further detail on collecting the information from the above can be found in the Alces Cloud documentation.


Alternatively, to launch the login node through the Alces Cloud web interface:

-

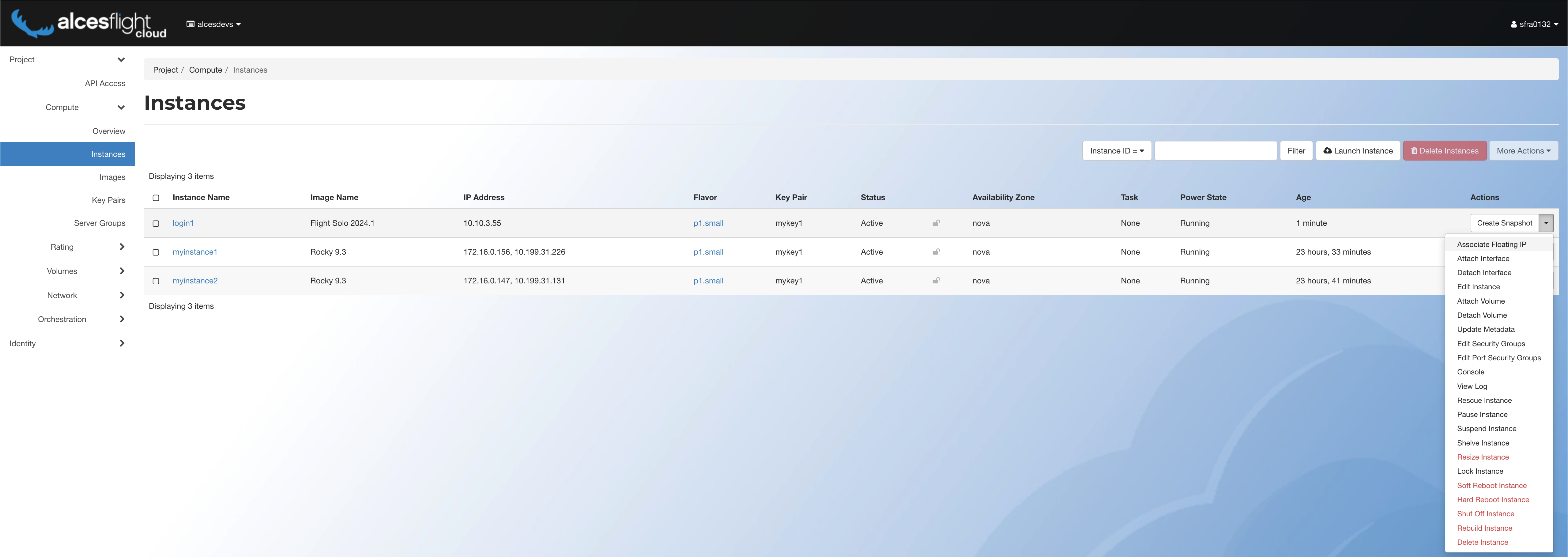

Go to the Alces Cloud instances page.

-

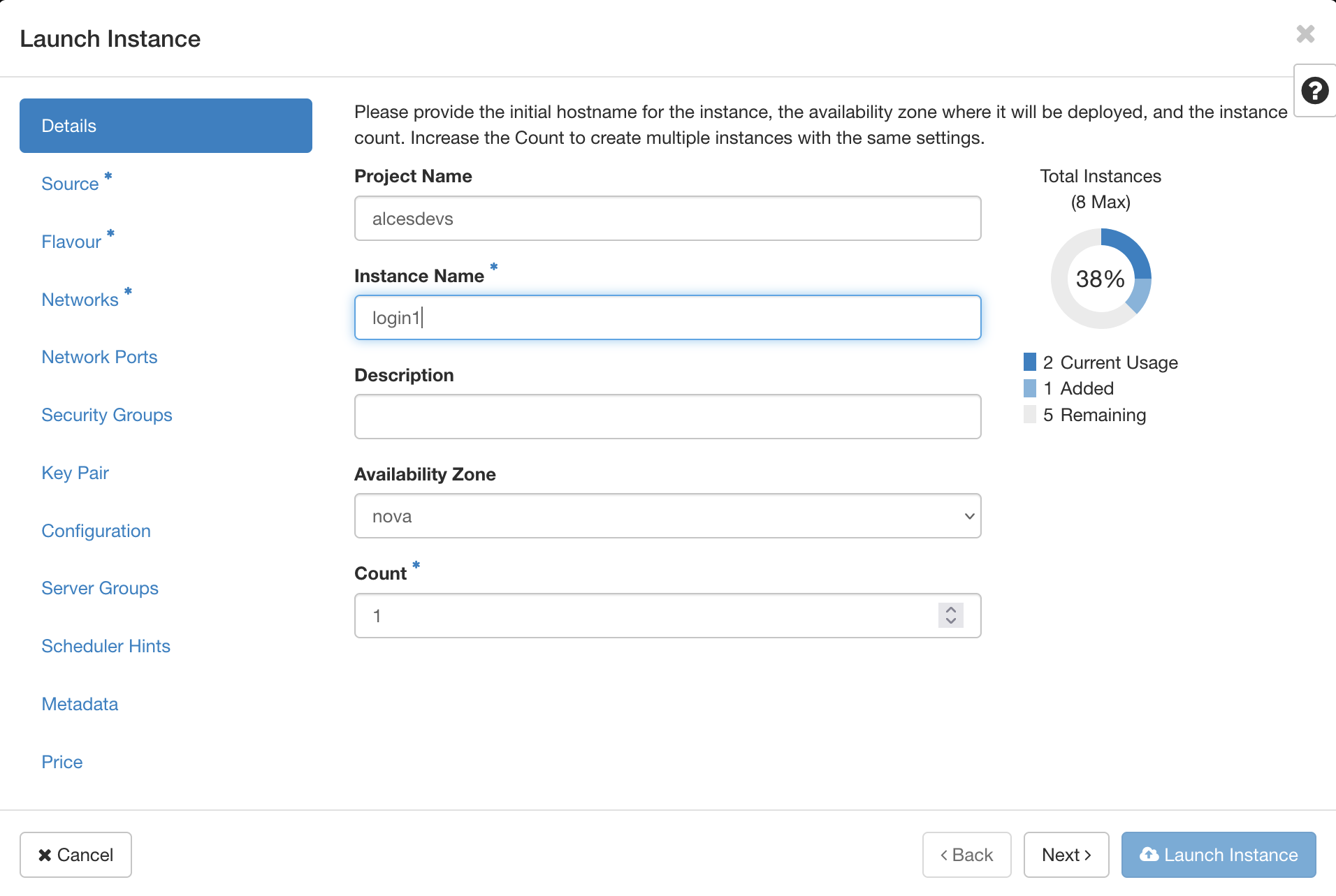

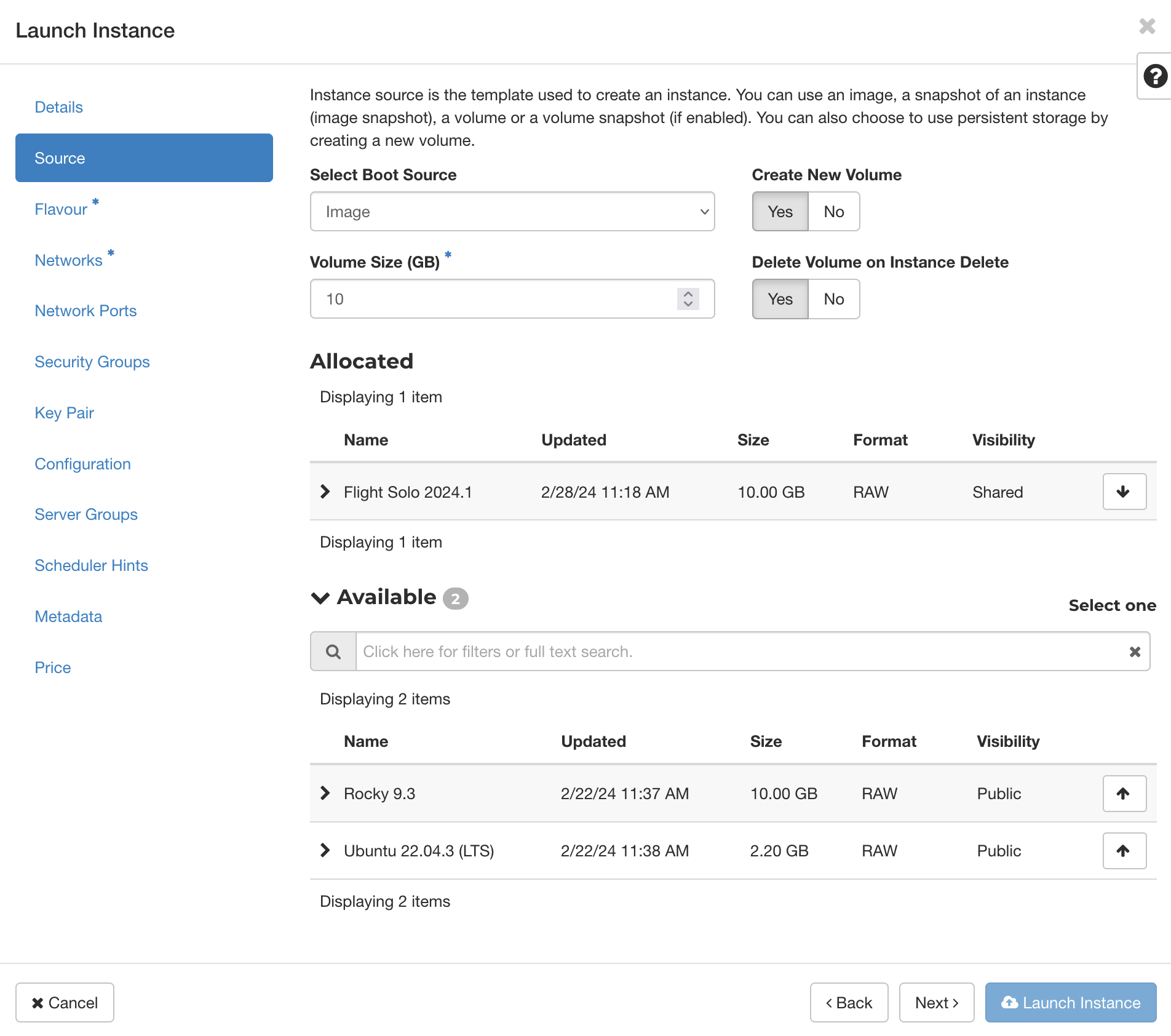

Click "Launch Instance", and the instance creation window will pop up.

-

Fill in the instance name, and leave the number of instances as 1, then click next.

-

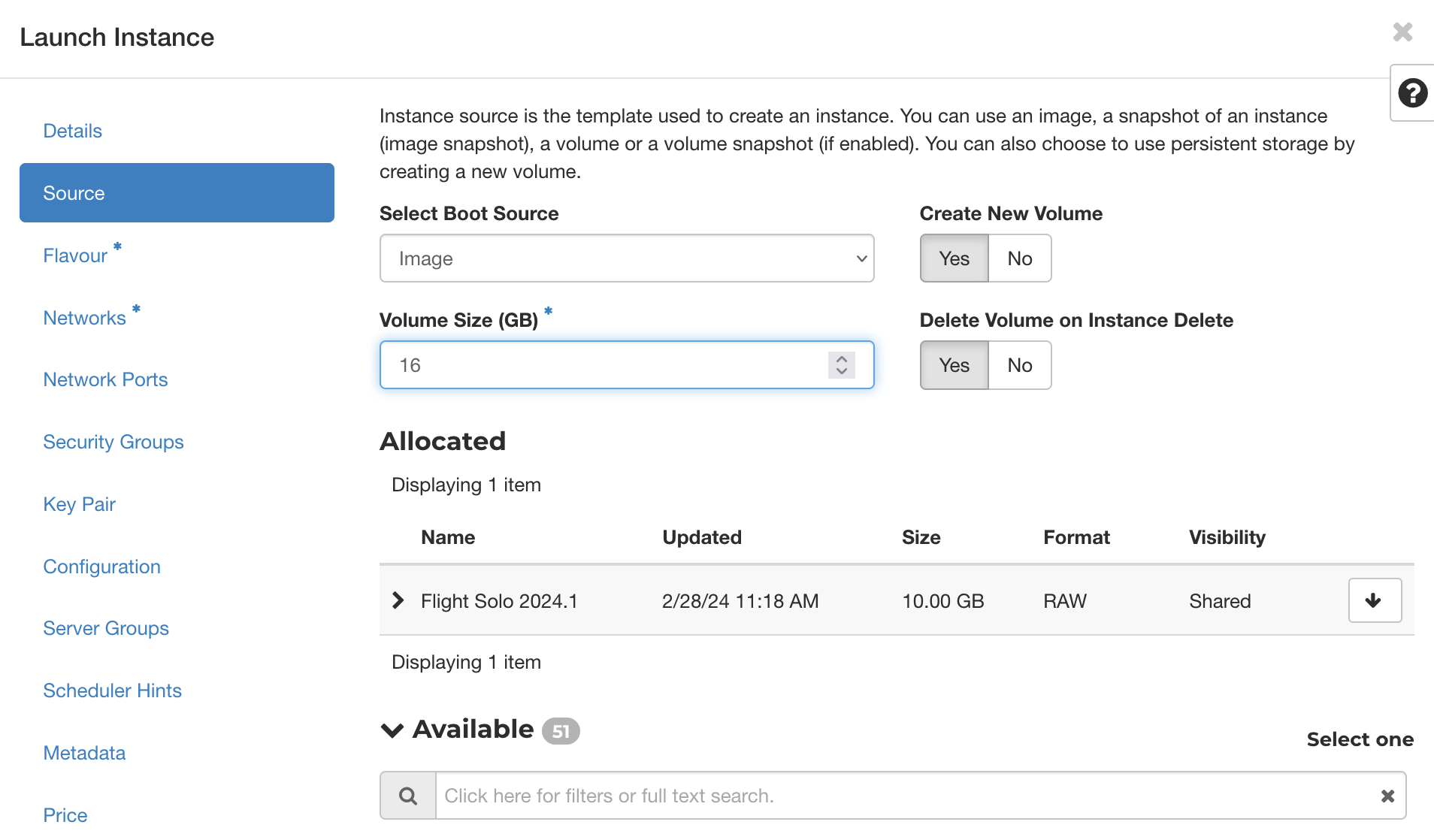

Choose the desired image to use by clicking the up arrow at the end of its row. It will be displayed in the "Allocated" section when selected.

-

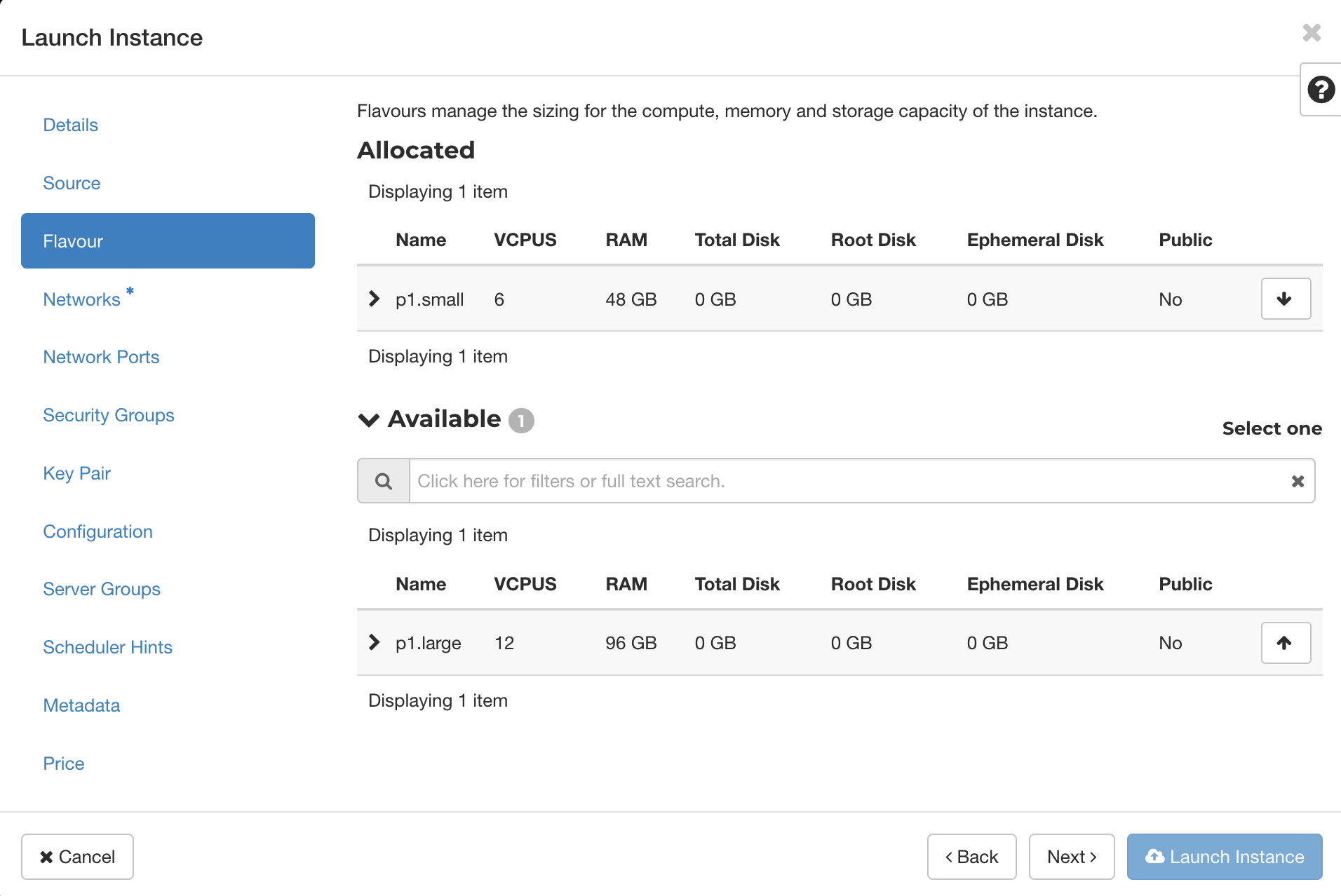

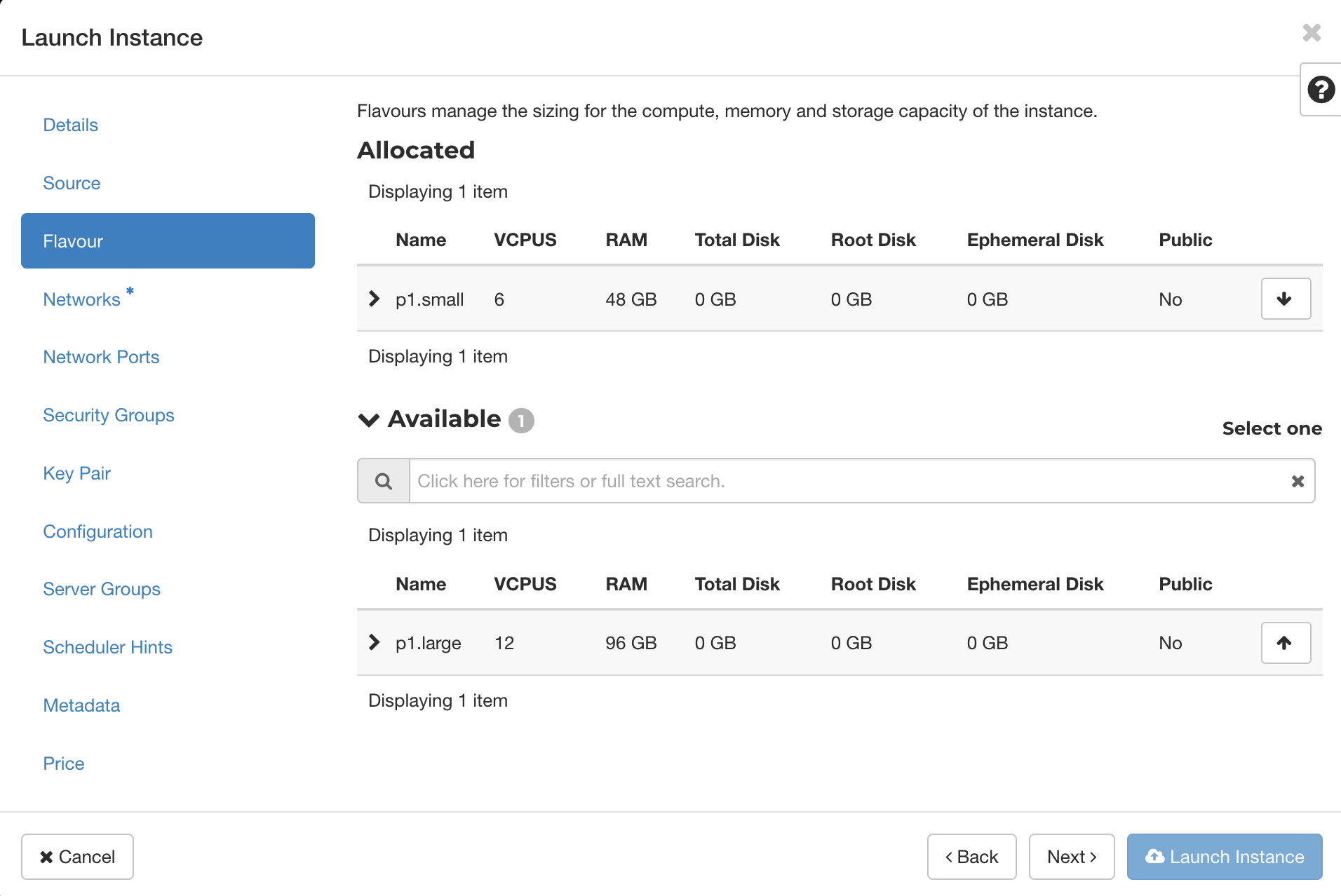

Choose the desired instance size by clicking the up arrow at the end of its row. It will be displayed in the "Allocated" section when selected.

-

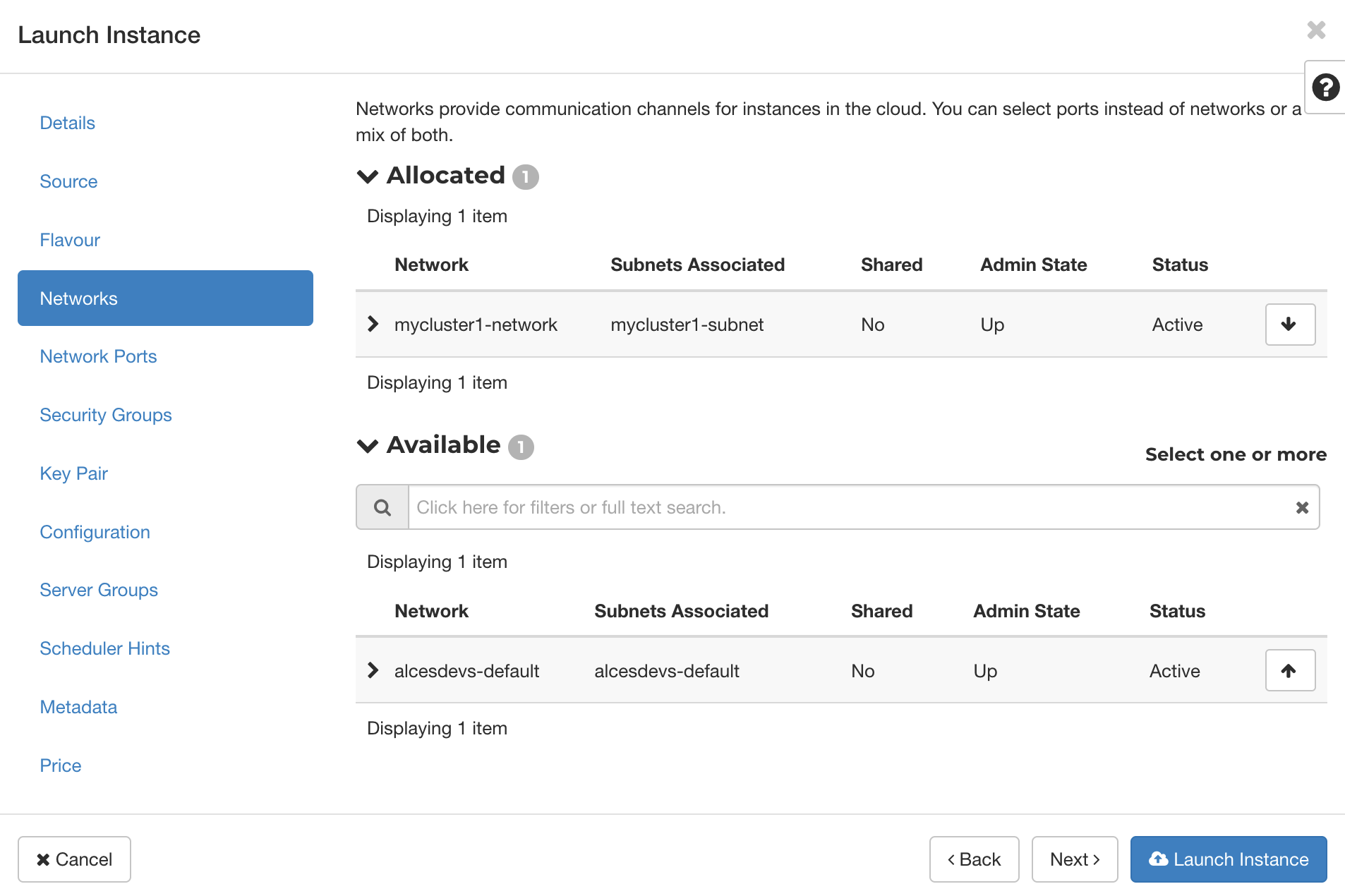

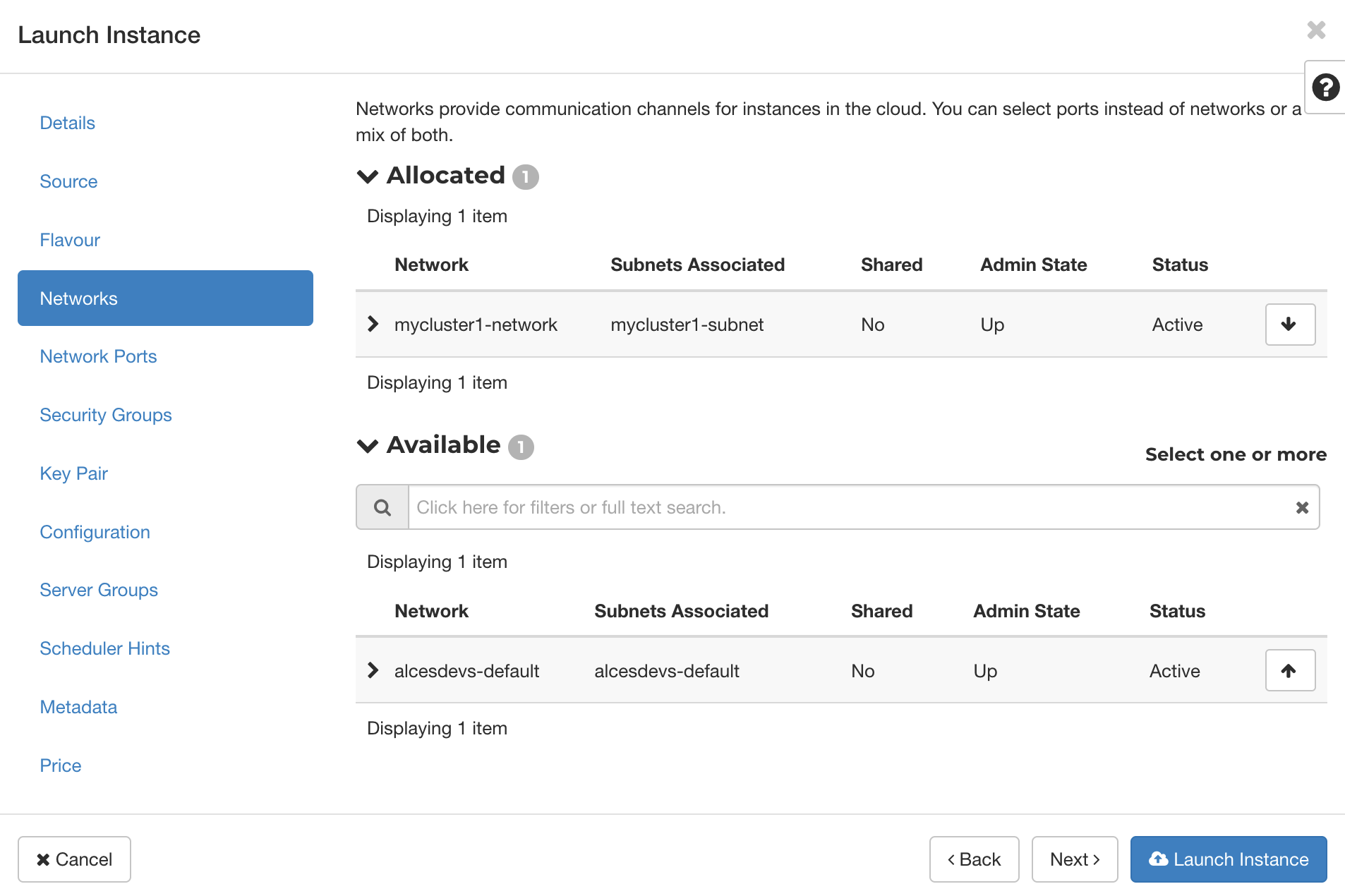

Choose a network in the same way as an image or instance size. Note that all nodes in a cluster must be on the same network.

-

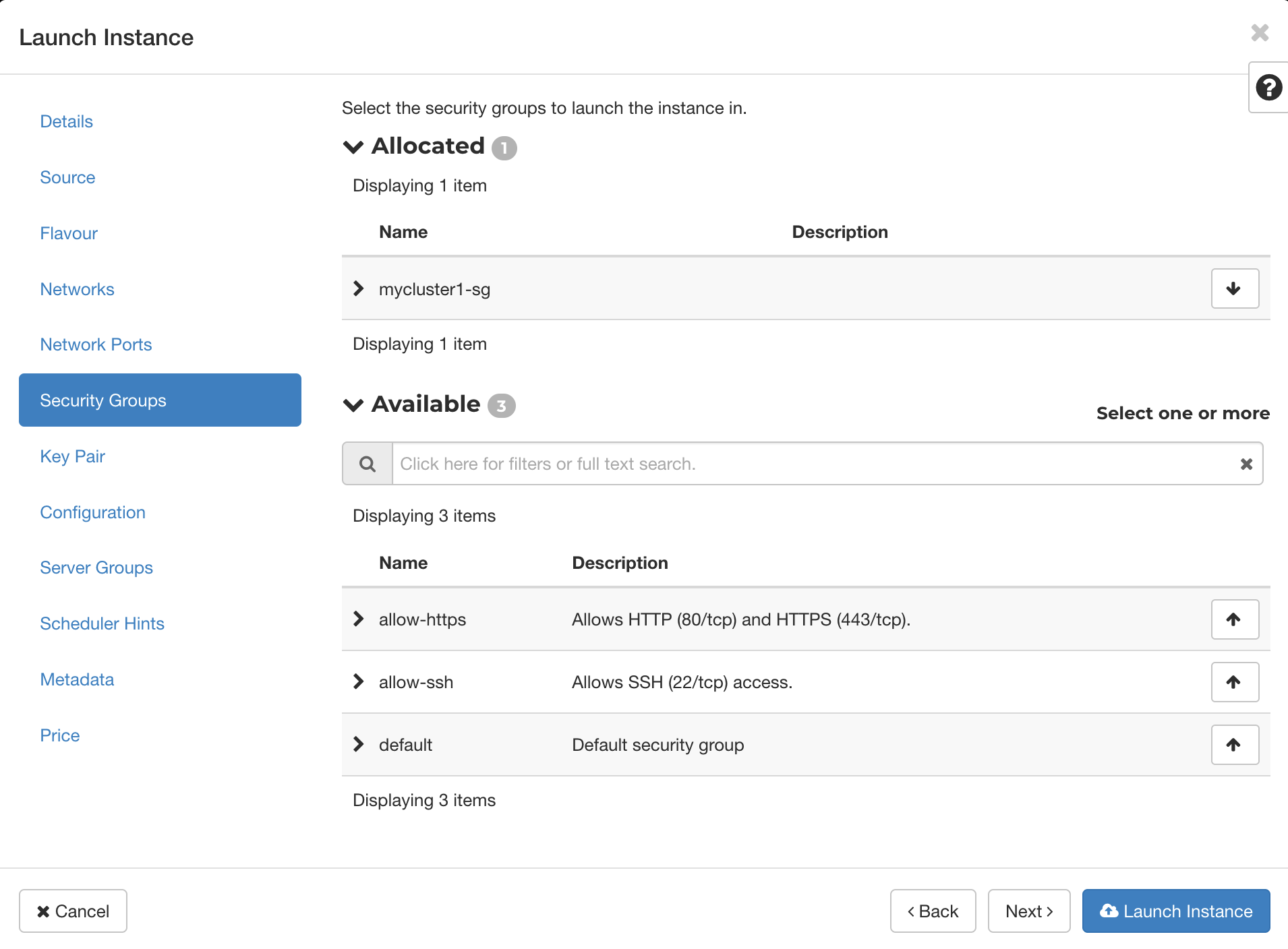

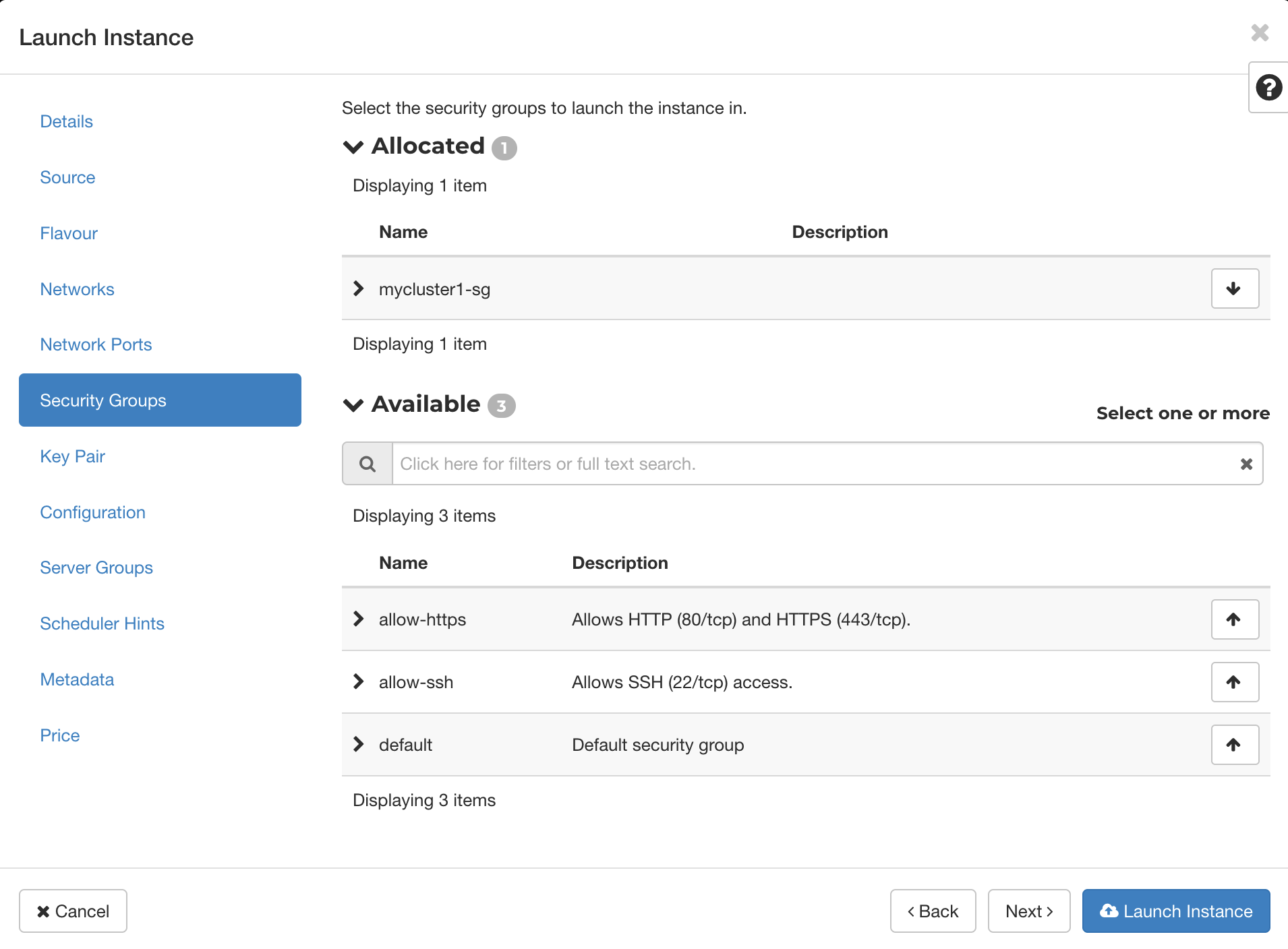

Choose a security group in the same way as an image or instance size. Note that all nodes in a cluster must be in the same security group.

-

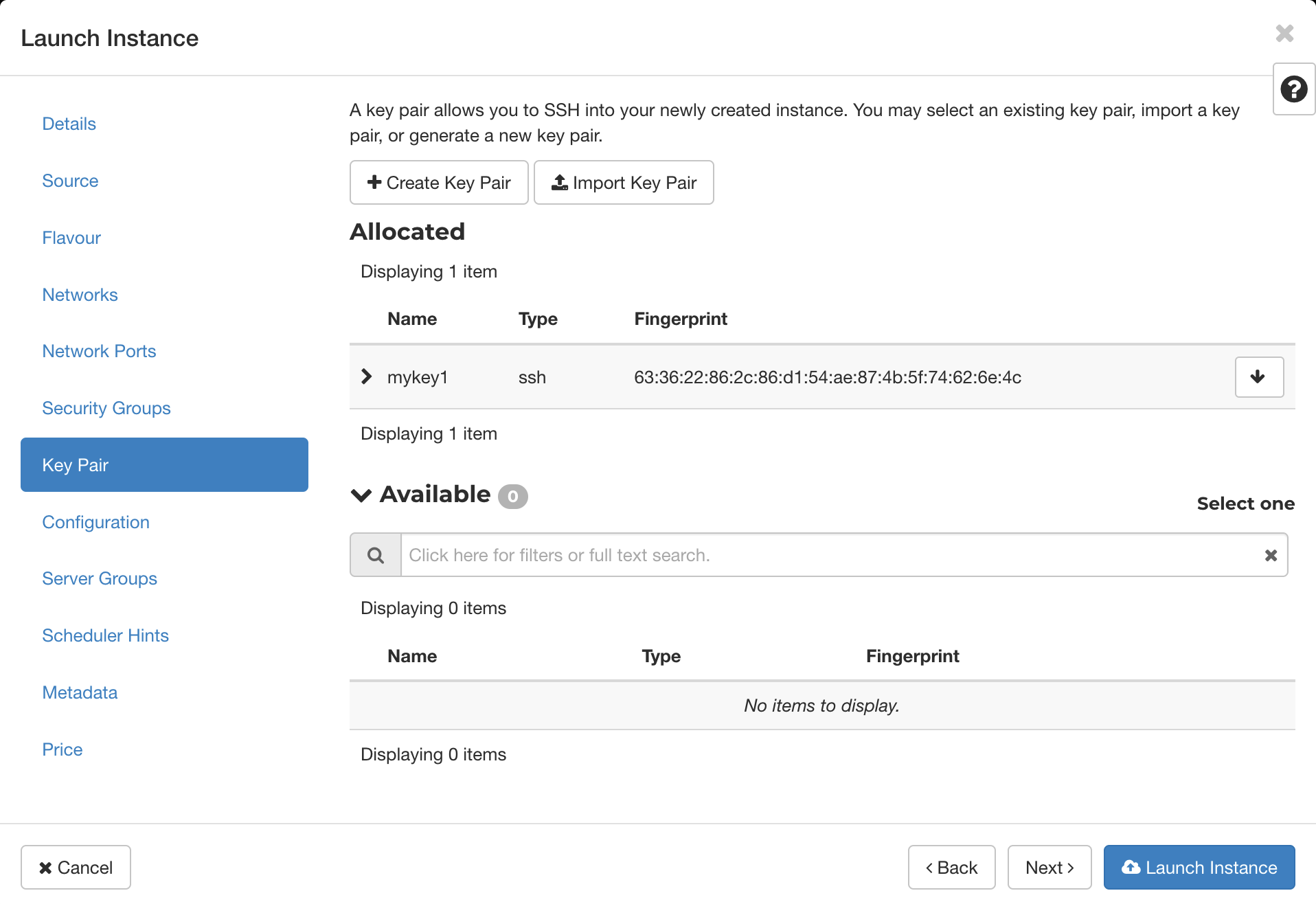

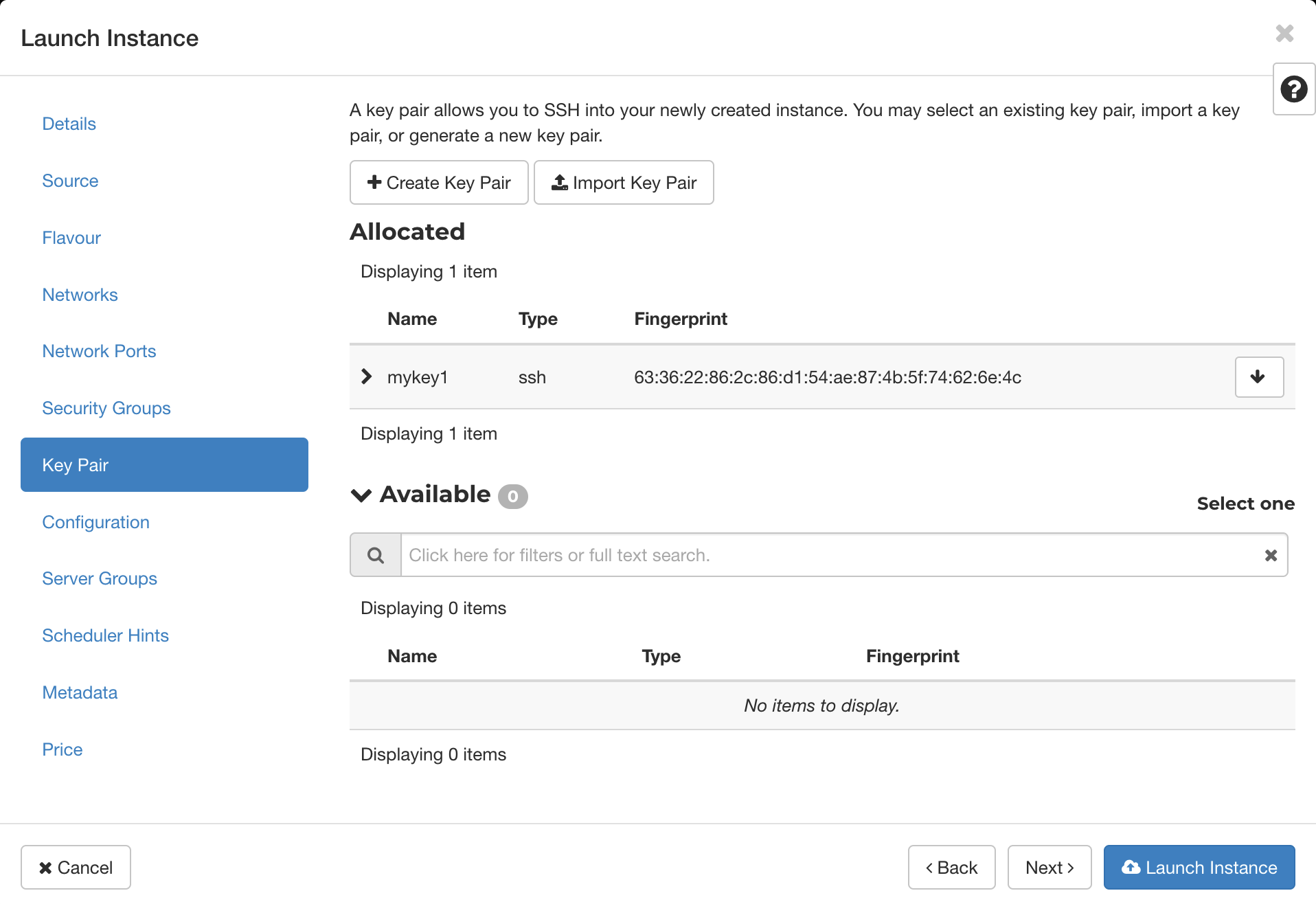

Choose the keypair in the same way as an image or instance size.

-

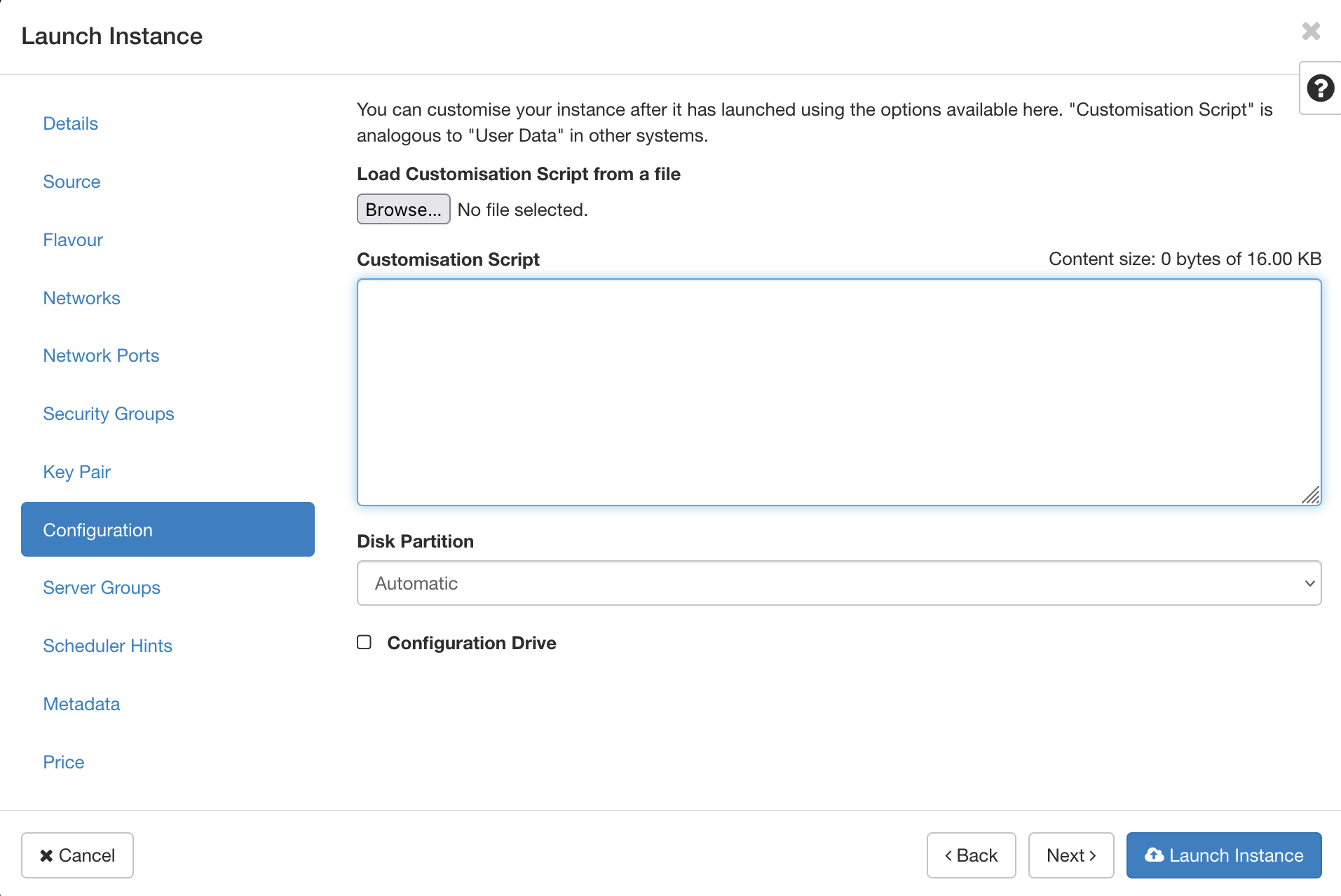

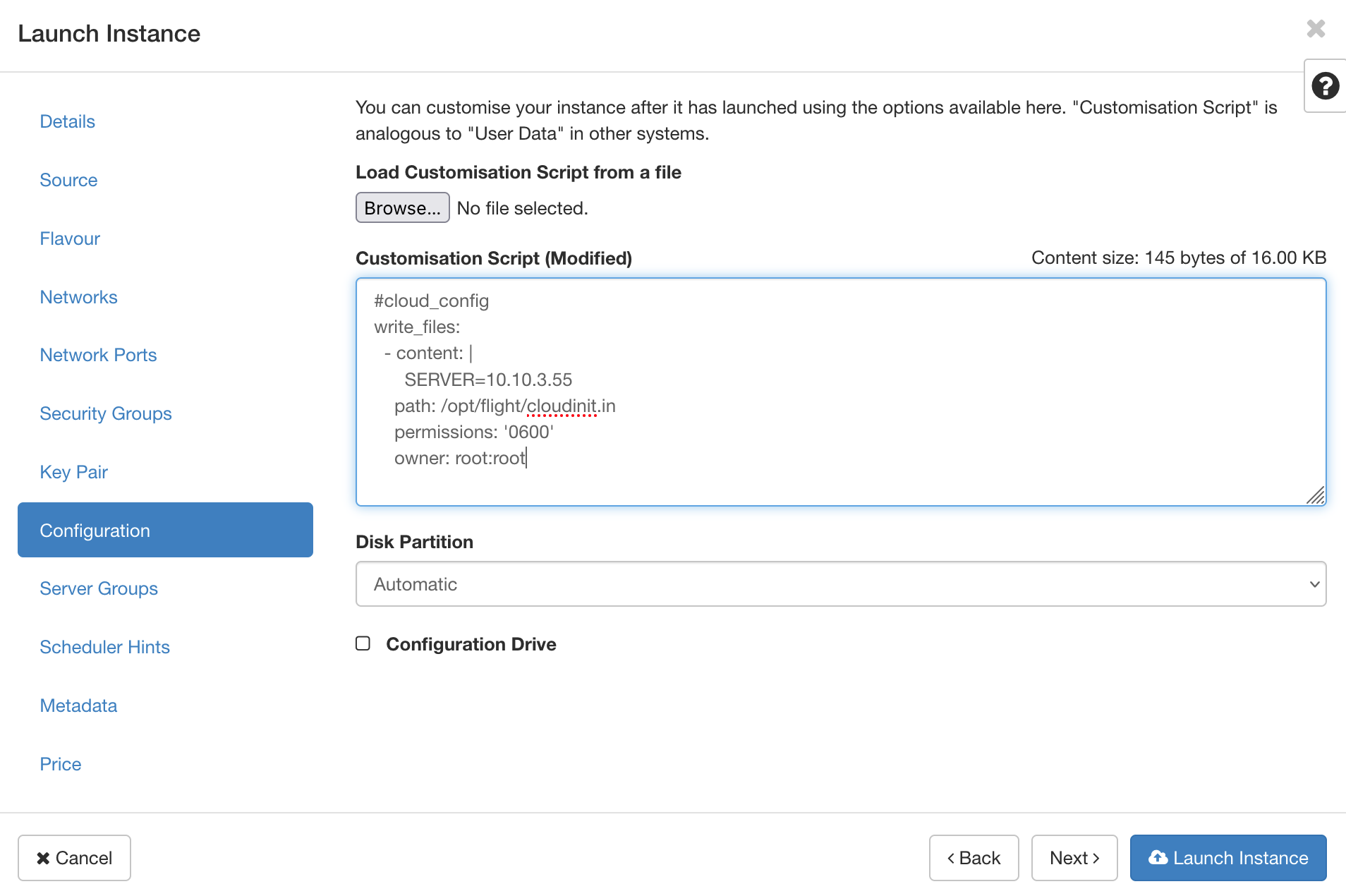

In the "Configuration" section, there is a "Customisation Script" section with a text box. This will be used to set your user data

-

When all options have been selected, press the "Launch Instance" button to launch. If the button is greyed out, then a mandatory setting has not been configured.

-

Go to the "Instances" page in the "Compute" section. The created node should be there and be finishing or have finished creation.

-

Click on the down arrow at the end of the instance row. This will bring up a drop-down menu.

-

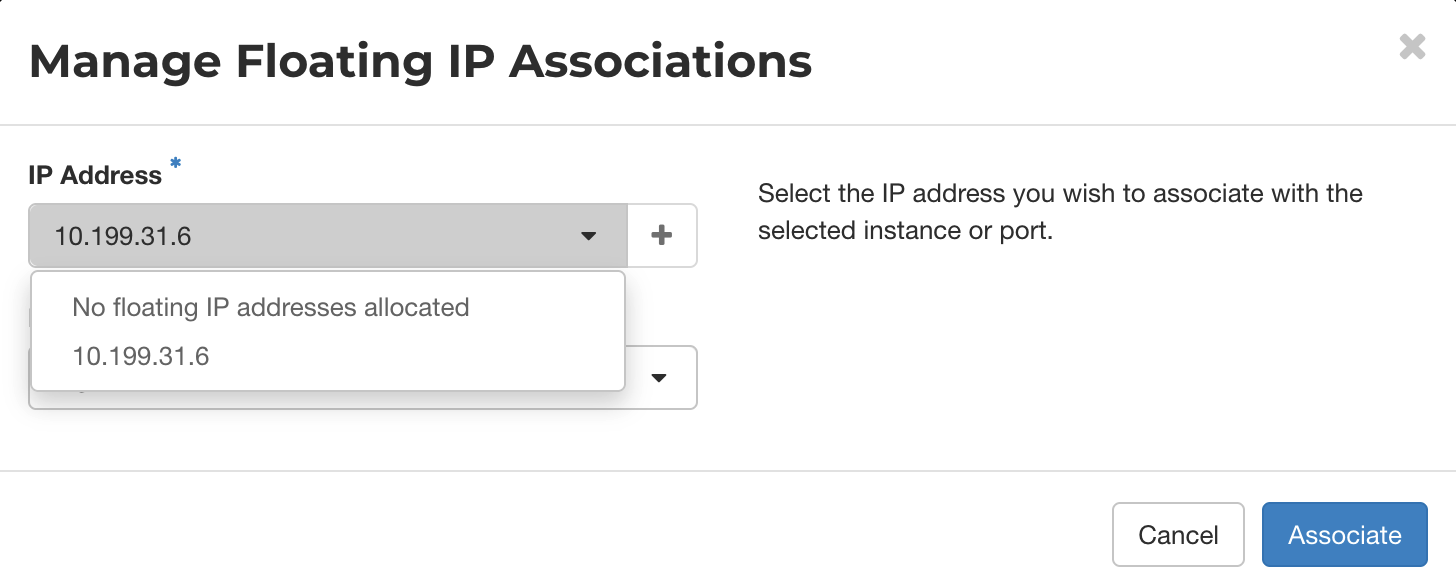

Select "Associate Floating IP", this will make the ip management window pop up.

-

Associate a floating IP, either by using an existing one or allocating a new one.

-

To use an existing floating IP:

-

Open the IP Address drop-down menu.

-

Select one of the IP Addresses.

-

Click "Associate" to finish associating an IP.

-

-

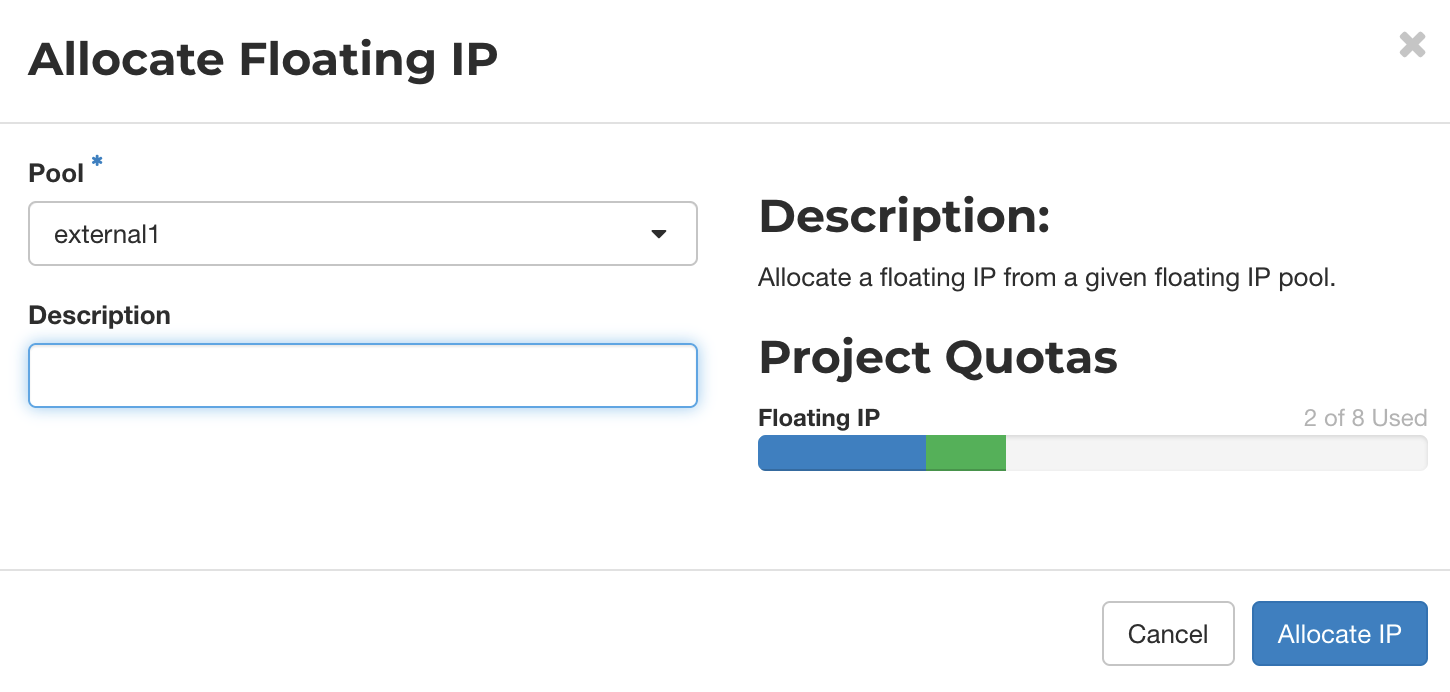

To allocate a new floating IP:

-

Click the "+" next to the drop-down arrow to open the allocation menu.

-

Click "Allocate IP".

-

-

-

Click "Associate" to finish associating an IP.

Further detail on collecting the information from the above can be found in the Alces Cloud documentation.

Launch Compute Nodes

Prepare User Data

Setting up compute nodes is done slightly differently from setting up a login node. The basic steps are the same, except that the subnet, network and security group need to match those used for the login node.

The following is the minimum cloud-init data necessary. It allows the login node to find the compute nodes, as long as they are on the same network, and to SSH into them as the root user (which is necessary for setup).

    #cloud-config
    users:
      - default
      - name: root
        ssh_authorized_keys:
          - <Content of ~/.ssh/id_alcescluster.pub from root user on login node>

Tip

The above is not required if the SHAREPUBKEY option was provided to the login node. In that case, providing the SERVER option to the compute node is enough to enable root access from the login node.
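When SHAREPUBKEY is not used, the minimal compute user data can be generated on the login node from root's public key rather than copied by hand. A sketch, assuming it is run as root on the login node so that `~/.ssh/id_alcescluster.pub` exists; `write_compute_userdata` and the output name `compute-userdata.yml` are hypothetical.

```shell
# Hypothetical helper: embed a public key into minimal compute node user data.
# Usage: write_compute_userdata <public-key-file> <output-file>
write_compute_userdata() {
  local keyfile=$1 out=$2 key
  # A public key is a single line; accept it even without a trailing newline.
  IFS= read -r key < "$keyfile" || [ -n "$key" ] || { echo "empty or missing key file" >&2; return 1; }
  cat > "$out" <<EOF
#cloud-config
users:
  - default
  - name: root
    ssh_authorized_keys:
      - ${key}
EOF
}

# Example (as root on the login node):
#   write_compute_userdata ~/.ssh/id_alcescluster.pub compute-userdata.yml
```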

There are several options that can be added to change how a compute node will contact nodes on startup.

- Sending to a specific server:
    - Instead of broadcasting across a range, add the line `SERVER=<private server IP>` to send to that node specifically, which would be your login node.
- Add an auth key:
    - Add the line `AUTH_KEY=<string>`. This means that the compute node will send its Flight Hunter packet with this key. This must match the auth key provided to your login node.

An example cloud-init script combining these options with the root SSH key:

    #cloud-config
    write_files:
      - content: |
          SERVER=10.10.0.1
          AUTH_KEY=banana
        path: /opt/flight/cloudinit.in
        permissions: '0600'
        owner: root:root
    users:
      - default
      - name: root
        ssh_authorized_keys:
          - <Content of ~/.ssh/id_alcescluster.pub from root user on login node>
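Because the compute node's `AUTH_KEY` must match the login node's, comparing the two user data files before launching can catch a mismatch early. A sketch with hypothetical helper names, assuming each file contains a single `AUTH_KEY=` line as in the examples in this guide.

```shell
# Hypothetical helper: print the AUTH_KEY value from a user data file.
auth_key_of() {
  sed -n 's/^[[:space:]]*AUTH_KEY=//p' "$1" | head -n 1
}

# Hypothetical helper: succeed if both files define the same, non-empty AUTH_KEY.
# Usage: auth_keys_match <login-userdata> <compute-userdata>
auth_keys_match() {
  local a b
  a=$(auth_key_of "$1")
  b=$(auth_key_of "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```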

Info

More information on the user data options available for Flight Solo can be found in the user data documentation.

Deploy

Launch compute nodes with a command similar to the following:

    $ openstack server create --flavor p1.small \
        --image "Flight Solo VERSION" \
        --boot-from-volume 10 \
        --network "mycluster1-network" \
        --key-name "MyKey" \
        --security-group "mycluster1-sg" \
        --user-data myuserdata.yml \
        --min 2 \
        --max 2 \
        node

Where:

- `flavor` - The desired size of the instance
- `image` - The Flight Solo image imported to Alces Cloud
- `boot-from-volume` - The size of the system disk in GB
- `network` - The name or ID of the network created for the cluster
- `key-name` - The name of the SSH key to use
- `security-group` - The name or ID of the security group created previously
- `user-data` - The file containing cloud-init user data
- `min` and `max` - The number of nodes to launch
- `node` - The name of the deployment, to which numbers are appended (e.g. this example creates `node-1` and `node-2`)
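Before parsing nodes, it can help to wait until every compute instance is running. A sketch, assuming the naming scheme described above (`node-1`, `node-2`, ...) and the `openstack server show <name> -f value -c status` output options; `wait_for_active`, its default timeout and poll interval are hypothetical choices.

```shell
# Hypothetical helper: wait until <prefix>-1 .. <prefix>-<count> all report ACTIVE.
# Fails immediately if any instance is in ERROR, or after the timeout expires.
# Usage: wait_for_active <prefix> <count> [timeout-seconds] [interval-seconds]
wait_for_active() {
  local prefix=$1 count=$2 timeout=${3:-600} interval=${4:-10}
  local waited=0 i status pending
  while :; do
    pending=0
    for (( i = 1; i <= count; i++ )); do
      status=$(openstack server show "${prefix}-${i}" -f value -c status)
      if [ "$status" = "ERROR" ]; then
        echo "${prefix}-${i} is in ERROR state" >&2
        return 1
      fi
      [ "$status" = "ACTIVE" ] || pending=$(( pending + 1 ))
    done
    (( pending == 0 )) && return 0
    if (( waited >= timeout )); then
      echo "timed out with $pending node(s) not ACTIVE" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}

# Example: wait_for_active node 2
```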

-

Further detail on collecting the information from the above can be found in the Alces Cloud documentation.

-

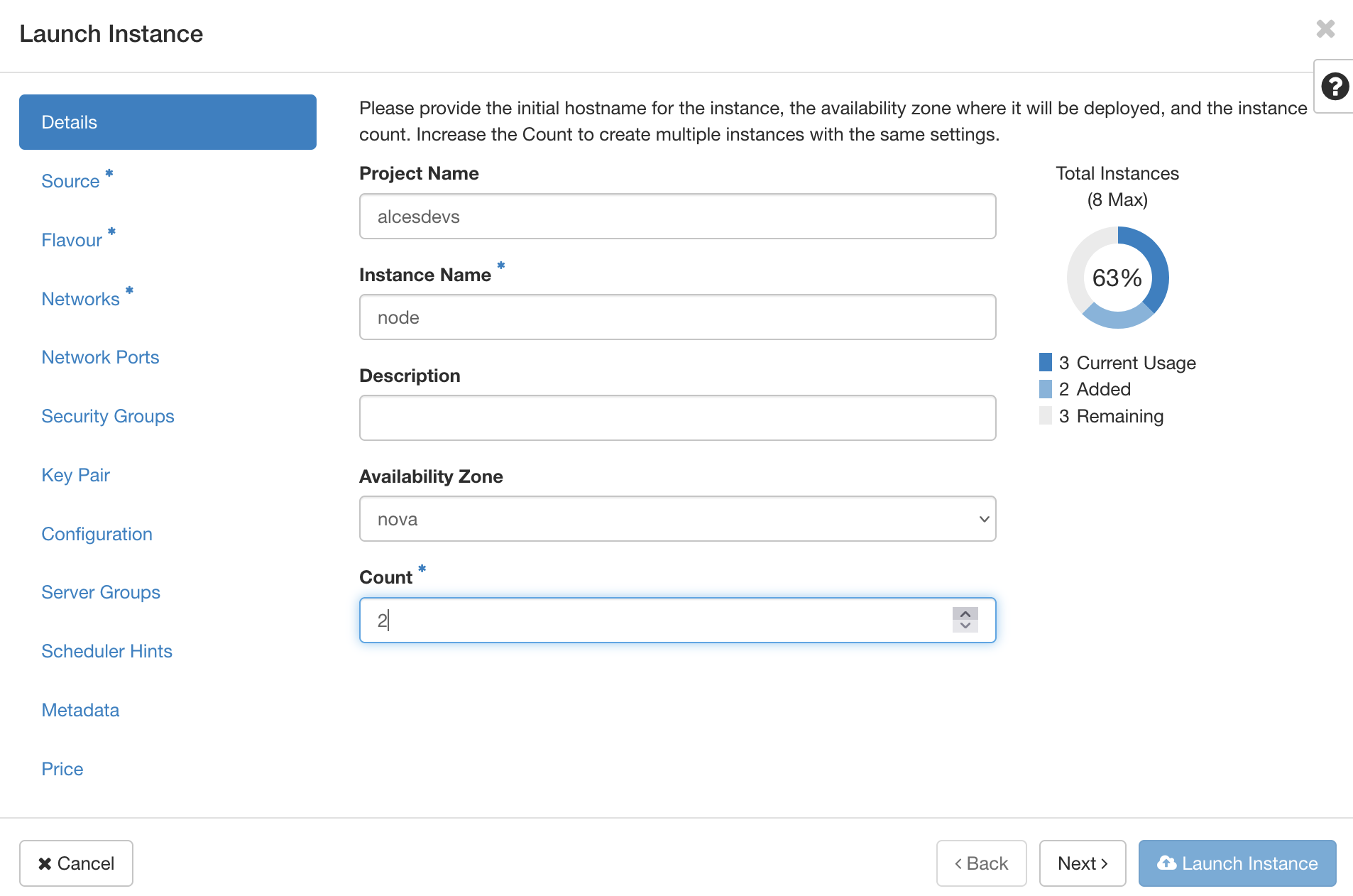

Go to the Alces Cloud instances page.

Click "Launch Instance", and the instance creation window will pop up.

Fill in the instance name, set the number of instances to create, then click "Next".

Choose the desired image to use by clicking the up arrow at the end of its row. It will be displayed in the "Allocated" section when selected.

Choose the desired instance size by clicking the up arrow at the end of its row. It will be displayed in the "Allocated" section when selected.

Choose a network in the same way as an image or instance size. Note that this should be the same network as the login node.

Choose a security group in the same way as an image or instance size. Note that this should be the same security group as the login node.

Choose the keypair in the same way as an image or instance size.

In the "Configuration" section, there is a "Customisation Script" section with a text box. Paste the user data script prepared earlier into this text box.

When all options have been selected, press the "Launch Instance" button to launch. If the button is greyed out, then a mandatory setting has not been configured.

General Configuration

Create Node Inventory

Parse your node(s) with the command `flight hunter parse`.

This will display a list of hunted nodes, for example:

    [flight@login-node.novalocal ~]$ flight hunter parse
    Select nodes: (Scroll for more nodes)
    ‣ ⬡ login-node.novalocal - 10.10.0.1
      ⬡ compute-node-1.novalocal - 10.10.101.1

Select the desired node to be parsed with Space, and you will be taken to the label editor:

    Choose label: login-node.novalocal

Here, you can edit the label like plain text:

    Choose label: login1

Tip

You can clear the current node name by pressing Down in the label editor.

When done editing, press Enter to save. The modified node label will appear next to the IP address and original node label:

    Select nodes: login-node.novalocal - 10.10.0.1 (login1) (Scroll for more nodes)
    ‣ ⬢ login-node.novalocal - 10.10.0.1 (login1)
      ⬡ compute-node-1.novalocal - 10.10.101.1

From this point, you can either hit Enter to finish parsing and process the selected nodes, or continue changing nodes. Either way, you can return to this list by running `flight hunter parse`.

Save the node inventory before moving on to the next step.

Tip

See `flight hunter parse -h` for more ways to parse nodes.

Add genders

Optionally, you may add genders to the newly parsed node. For example, if the node should have the genders `cluster` and `all`, run the command:

    flight hunter modify-groups --add cluster,all login1

SLURM Multinode Configuration

Configure profile

    flight profile configure

This brings up a UI, where several options need to be set. Use the up and down arrow keys to scroll through options and Enter to move to the next option. Options in brackets coloured yellow are the default options that will be applied if nothing is entered.

- Cluster type: The type of cluster setup needed, in this case `Slurm Multinode`.
- Cluster name: The name of the cluster.
- Setup Multi User Environment with IPA?: Boolean value to determine whether to configure a multi-user environment with IPA. If set to true, the following will need to be filled in:
    - IPA domain: The domain for the IPA server to use.
    - IPA secure admin password: The password to be used by the `admin` user of the IPA installation to manage the server.
- Default user: The user that you log in with.
- Set user password: Set a password to be used for the chosen default user.
- IP or FQDN for Web Access: As described here, this could be the public IP or public hostname.
- IP range of compute nodes: The IP range of the compute nodes used; remember to add the netmask, e.g. `172.31.16.0/20`.
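To double-check that the range entered actually covers the compute nodes (for example a compute node at `10.10.101.1`), the masked addresses can be compared in bash. A sketch; `ip_to_int` and `ip_in_cidr` are hypothetical helper names and only IPv4 is handled.

```shell
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local -a o
  IFS=. read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Hypothetical helper: succeed if <ip> falls inside <network>/<prefix>.
# Usage: ip_in_cidr <ip> <cidr>
ip_in_cidr() {
  local ip=$1 net=${2%/*} prefix=${2#*/} mask a b
  # Build the netmask from the prefix length (a /0 prefix matches everything).
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  a=$(ip_to_int "$ip")
  b=$(ip_to_int "$net")
  (( (a & mask) == (b & mask) ))
}

# Example: ip_in_cidr 10.10.101.1 10.10.0.0/16 && echo "covered"
```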


Apply identities by running the command `flight profile apply`.

First apply an identity to the login node:

    flight profile apply login1 login

Wait for the login node identity to finish applying. You can check the status of all nodes with `flight profile list`.

Tip

You can watch the progress of the application with `flight profile view login1 --watch`.

Apply an identity to each of the compute nodes (in this example, genders-style syntax is used to apply to `node01` and `node02`):

    flight profile apply node[01-02] compute

Tip

You can check all available identities for the current profile with `flight profile identities`.
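For illustration, the genders-style range `node[01-02]` stands for the names `node01` and `node02`, keeping the zero padding of the range. The bash sketch below (the hypothetical `expand_range`) handles only a single `prefix[start-end]` range and is not how `flight profile` itself expands node names; it only shows what the syntax denotes.

```shell
# Hypothetical helper: expand a single genders-style range such as node[01-02]
# into node01 and node02 (one name per line), preserving zero padding.
expand_range() {
  local re='^(.*)\[([0-9]+)-([0-9]+)\]$'
  if [[ ! $1 =~ $re ]]; then
    echo "$1"   # no range present: print the name unchanged
    return
  fi
  local prefix=${BASH_REMATCH[1]} start=${BASH_REMATCH[2]} end=${BASH_REMATCH[3]}
  local width=${#start} i
  # Force base 10 so that leading zeros (e.g. 08) are not read as octal.
  for (( i = 10#$start; i <= 10#$end; i++ )); do
    printf '%s%0*d\n' "$prefix" "$width" "$i"
  done
}
```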


Success

Congratulations, you've now created a SLURM Multinode environment! Learn more about SLURM in the HPC Environment docs.

Verifying Functionality

Create a file called `simplejobscript.sh`, and copy this into it:

    #!/bin/bash -l
    echo "Starting running on host $HOSTNAME"
    sleep 30
    echo "Finished running - goodbye from $HOSTNAME"

Run the script with `sbatch simplejobscript.sh`. To test all your nodes, try queuing up enough jobs that every node has to run at least one.
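Queuing several copies of the job can be scripted. A sketch, assuming `sbatch --parsable` (which prints the job ID, followed by `;cluster` when a cluster name is present) and a hypothetical `submit_jobs` helper. How many jobs are needed to reach every node depends on how many jobs Slurm can fit on each node at once.

```shell
# Hypothetical helper: submit a job script <count> times and print the job IDs.
# Usage: submit_jobs <count> <script>
submit_jobs() {
  local count=$1 script=$2 i id
  for (( i = 0; i < count; i++ )); do
    id=$(sbatch --parsable "$script") || return 1
    # --parsable may print "jobid;cluster"; keep only the job ID.
    echo "${id%%;*}"
  done
}

# Example: submit_jobs 8 simplejobscript.sh
```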

In the directory that the job was submitted from, there should be a `slurm-X.out` file, where `X` is the job ID returned by the `sbatch` command. This will contain the echo messages from the script created above.
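Once the jobs finish, the output files show which nodes ran them. A sketch with a hypothetical `hosts_used` helper that lists the distinct host names from the "Starting running on host" lines, assuming the `slurm-X.out` files are in the given directory (the current directory by default).

```shell
# Hypothetical helper: list each distinct host that ran simplejobscript.sh,
# based on the "Starting running on host <name>" lines in slurm-*.out.
# Usage: hosts_used [directory]
hosts_used() {
  local dir=${1:-.}
  cat "$dir"/slurm-*.out 2>/dev/null \
    | sed -n 's/^Starting running on host //p' \
    | sort -u
}
```

If every compute node appears in the output, all of them have run at least one job.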